Uploading binary data in ITK

Since every local Git repository contains a copy of the entire project history, it is important to avoid adding large binary files directly to the repository. Large binary files that are added and later removed still remain in the history, so the repository becomes bloated, takes up too much disk space, and requires excessive time and bandwidth to download.

The solution adopted by ITK is to store binary files, such as images, in a separate location outside the Git repository and to download them at build time with CMake.

A “content link” file contains an identifying Content Identifier (CID). The content link is stored in the Git repository at the path where the file would exist, but with a .cid extension appended to the file name. CMake finds these content link files at build time, downloads the data they reference from a list of server resources, and creates symlinks or copies of the original files at the corresponding location in the build tree.
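The naming convention can be illustrated with a small bash sketch that maps a content link in the source tree to the path where the data file is expected in the build tree. The function name `content_link_target` and the example paths are hypothetical, and the sketch assumes the build tree mirrors the source tree's layout. In ITK, CMake performs this mapping itself; no such script is involved.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given a source-tree root, a build-tree root, and a
# content link path inside the source tree, print the build-tree path where
# the referenced data file is expected to appear.
content_link_target() {
  local src_root=$1 build_root=$2 link=$3
  # Path of the content link relative to the source root
  local rel=${link#"$src_root"/}
  # Same relative path under the build root, with the .cid extension dropped
  printf '%s/%s\n' "$build_root" "${rel%.cid}"
}

content_link_target /src/ITK /build/ITK /src/ITK/Examples/Data/BrainSlice.png.cid
# prints /build/ITK/Examples/Data/BrainSlice.png
```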

The Content Identifier (CID) is a self-describing hash following the multiformats standard created by the InterPlanetary File System (IPFS) community. A file with a CID for its filename is content-verifiable. Locating files according to their CID makes content-addressed, as opposed to location-addressed, data exchange possible. This practice is the foundation of the decentralized web, also known as the dWeb or Web3. By adopting Web3, we gain:
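To make "self-describing hash" concrete, the bash sketch below assembles a CIDv1 by hand. It consists of a multibase prefix (`b` for lowercase base32), the CID version (`0x01`), a codec code (`0x55`, raw bytes), and a SHA-256 multihash (`0x12`, length `0x20`, then the digest). The sketch assumes GNU coreutils (`sha256sum`, `base32`) and bash's builtin `printf`. The result matches what IPFS tools report only when a file is stored as a single raw block; larger files are chunked into a UnixFS DAG and receive a different CID. Treat this as an illustration of content verification, not as a replacement for the upload tools.

```shell
#!/usr/bin/env bash
# Sketch: compute a CIDv1 (base32 multibase, raw codec, sha2-256 multihash)
# for the bytes of a single file. Assumes GNU coreutils and bash's printf.
raw_cid() {
  local hex b32
  # Hex SHA-256 digest of the file contents
  hex=$(sha256sum "$1" | cut -c1-64)
  b32=$(
    {
      # CID version 1, raw codec, sha2-256 multihash code, 32-byte digest length
      printf '\x01\x55\x12\x20'
      # Turn "e3b0..." into "\xe3\xb0..." so printf emits the raw digest bytes
      printf "$(printf '%s' "$hex" | sed 's/../\\x&/g')"
    } | base32 -w 0 | tr -d '=' | tr '[:upper:]' '[:lower:]'
  )
  # Multibase prefix "b" marks lowercase, unpadded base32
  printf 'b%s\n' "$b32"
}
```

For example, `raw_cid /dev/null` (zero bytes of input) prints `bafkreihdwdcefgh4dqkjv67uzcmw7ojee6xedzdetojuzjevtenxquvyku`. Changing even one byte of the input yields a completely different CID, which is why a file named by its CID can be verified by recomputing the hash.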

- Permissionless data uploads
- Robust, redundant storage
- Local and peer-to-peer storage
- Scalability
- Sustainability

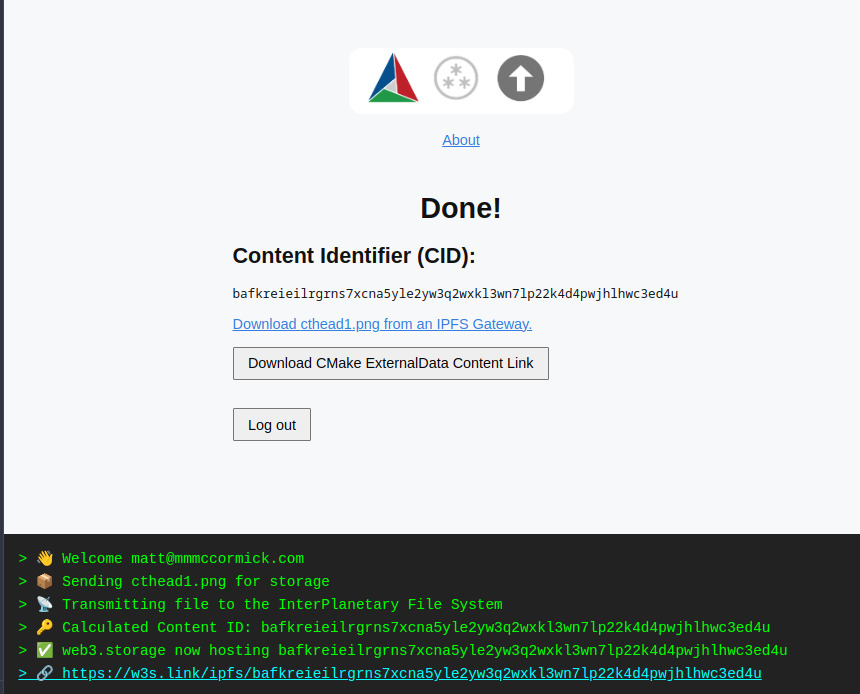

Contributors to ITK upload their data through a simple web app that uses web3.storage, an easy-to-use, permissionless, free service.

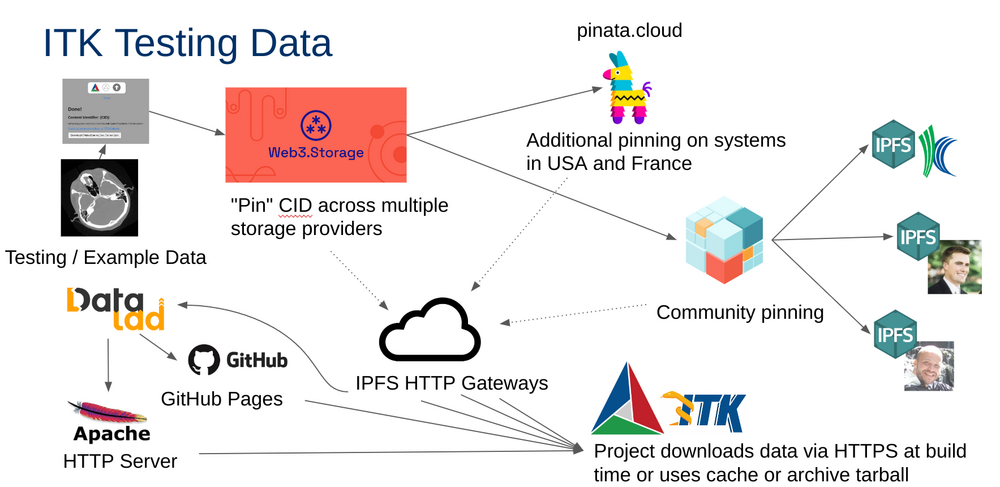

Data used in the ITK Git repository is periodically tracked in a dedicated DataLad repository, the ITKData DataLad repository, and stored across redundant locations so that it can be retrieved from any of the following:

- Local IPFS nodes
- Peer IPFS nodes
- Kitware’s IPFS Server
- ITKTestingData GitHub Pages CDN
- Kitware’s Apache HTTP Server
- Local testing data cache
- Archive tarballs from GitHub Releases

Testing data workflow. Testing or example data is uploaded to IPFS via the content-link-upload.itk.org web app, which pins the data on multiple servers across the globe. At release time, the data is also pinned on multiple servers in the USA and France and by community pinners, stored in the DataLad Git repository, and served on an Apache HTTP server and the GitHub Pages CDN. At test time, an ITK build can pull the data from a local cache, an archive tarball, the Apache HTTP server, the GitHub Pages CDN, or multiple IPFS HTTP gateways.
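The retrieval side of this workflow is an ordered fallback: try each source in turn until one provides the data. The bash sketch below shows only that idea. The function `fetch_by_cid`, the `<source>/<cid>` layout, and the source list are assumptions made for illustration; ITK's actual retrieval is handled by CMake at build time.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of an ordered fallback over data sources.
# Each source is either a local directory (such as a local data cache) or an
# HTTP(S) base URL; the object is assumed to be stored as <source>/<cid>.
fetch_by_cid() {
  local cid=$1 dest=$2 src
  shift 2
  for src in "$@"; do
    case $src in
      http://* | https://*)
        # -f makes curl fail on HTTP errors instead of saving an error page
        if curl -fsSL "$src/$cid" -o "$dest"; then
          return 0
        fi
        # Discard any partial download before trying the next source
        rm -f "$dest"
        ;;
      *)
        if [ -f "$src/$cid" ] && cp "$src/$cid" "$dest"; then
          return 0
        fi
        ;;
    esac
  done
  echo "fetch_by_cid: $cid not found in any source" >&2
  return 1
}
```

For example, `fetch_by_cid "$cid" image.png /path/to/local-cache https://gateway.example/ipfs` would check the local directory first and then request `https://gateway.example/ipfs/<cid>` (a hypothetical gateway host using the common IPFS path-gateway URL form). Because the data is content addressed, a production version should also verify the downloaded bytes against the CID before accepting them.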

See also our Data guide for more information. If you just want to browse and download the ITK testing images, see the ITKData DataLad repository.

Adding images as input to ITK sources

ITK examples and ITK class tests (see Section 9.4 of the ITK Software Guide) rely on input and baseline images (or data in general) to demonstrate and check the features of a given class. Hence, when developing an ITK example or test, images will need to be added to the Git repository through their content links.

When adding images for an ITK example or test, the following principles should be followed:

- Images should be small.
  - The source tree is not an image database, but a source code repository.
  - Adding an image larger than 50 KB should be justified by a discussion with the ITK community.
- Regression (baseline) images should not use the Analyze format unless the test is for the AnalyzeImageIO and related classes.
- Images should use non-trivial metadata.
  - Origin should be different from zero.
  - Spacing should be different from one, and it should be anisotropic.
  - Direction should be different from identity.
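A contributor could catch oversized files before uploading with a check along these lines. This is a hypothetical bash helper, not part of ITK. It assumes the 50 KB guideline means 50 KiB (51,200 bytes), and it does not check the metadata guidelines (origin, spacing, direction).

```shell
#!/usr/bin/env bash
# Hypothetical check for the "images should be small" guideline.
# Assumption: the 50 KB limit is interpreted as 50 KiB.
MAX_TEST_IMAGE_BYTES=$((50 * 1024))

check_image_size() {
  local f size status=0
  for f in "$@"; do
    size=$(wc -c < "$f")
    # Arithmetic expansion strips the padding some wc implementations add
    size=$((size))
    if [ "$size" -gt "$MAX_TEST_IMAGE_BYTES" ]; then
      echo "$f: $size bytes exceeds the limit; discuss with the ITK community first" >&2
      status=1
    fi
  done
  return "$status"
}
```

Usage: `check_image_size path/to/*.png` returns a nonzero status if any listed file is over the limit, so it can gate a pre-commit or pre-upload step.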

Upload new testing data

Prerequisites

web3.storage is a decentralized IPFS storage provider where any ITK community member can upload binary data files. There are two primary methods available to upload data files:

A. The CMake ExternalData Web3 upload browser interface.

B. The w3 command-line executable that comes with the @web3-storage/w3cli Node.js NPM package.

Once files have been uploaded, they will be publicly available and accessible since data is content addressed on the IPFS peer-to-peer network.

In addition to these two methods, documented in detail below, another possibility is to pin the data on IPFS with another pinning service and create the content link file manually. The content link file is simply a plain text file with a .cid extension whose contents are the file's CID. However, the two documented methods are recommended because of their simplicity and to keep CID values consistent.
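For the manual route, creating the content link amounts to writing the CID into `<file>.cid`. The bash sketch below does that with a hypothetical `write_content_link` helper. Its format check is a loose heuristic (base32 CIDv1 strings start with `b` and use only the characters `a-z` and `2-7`). It also rejects older CIDv0 (`Qm...`) strings so that manually created links keep the same form as the `bafkrei...` example shown in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper for the manual route: write <file>.cid containing a CID
# obtained from another IPFS pinning service.
write_content_link() {
  local file=$1 cid=$2
  # Loose sanity check (assumption): lowercase base32 CIDv1 only
  if [[ ! $cid =~ ^b[a-z2-7]+$ ]]; then
    echo "write_content_link: '$cid' does not look like a base32 CIDv1" >&2
    return 1
  fi
  # The content link is just the CID as plain text
  printf '%s\n' "$cid" > "$file.cid"
}
```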

At release time, the release manager uploads and archives repository data references in other storage locations for additional redundancy.

Option A) Upload Via the Web Interface

Use the Content Link Upload tool (Alt Link) to upload your data to IPFS and download the corresponding CMake content link file.

Option B) Upload Via CMake and Node.js CLI

Install the w3 CLI with the @web3-storage/w3cli Node.js package:

```shell
npm install -g @web3-storage/w3cli
```

Log in with your credentials:

```shell
w3 login
```

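Create a w3externaldata bash/zsh function:

```shell
# Upload a file, keep the last output line, extract the text after "/ipfs/"
# (the CID), and write it to <filename>.cid.
function w3externaldata() { w3 put "$1" --no-wrap | tail -n 1 | awk -F "/ipfs/" '{print $2}' | tee "$1.cid"; }
```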

Call the function with the file to be uploaded. This command will generate the <filename>.cid content link:

```shell
w3externaldata <filename>
```

Example output:

```
1 file (0.3MB)
⁂ Stored 1 file
bafkreifpfhcc3gc7zo2ds3ktyyl5qrycwisyaolegp47cl27i4swxpa2ey
```
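One weakness of the one-line w3externaldata function is that, if the last line of the upload output does not contain `/ipfs/`, `awk` prints an empty line and `tee` still writes an empty `.cid` file. The hypothetical variant below keeps the same `w3 put ... --no-wrap` pipeline and the same assumption about its output as the original function, but refuses to write the content link when no CID is found.

```shell
#!/usr/bin/env bash
# Hypothetical variant of w3externaldata that never writes an empty content link.
# Assumes, like the original function, that the last line printed by
# `w3 put <file> --no-wrap` contains a URL of the form .../ipfs/<CID>.
w3externaldata_checked() {
  local cid
  cid=$(w3 put "$1" --no-wrap | tail -n 1 | awk -F "/ipfs/" '{print $2}')
  if [ -z "$cid" ]; then
    echo "w3externaldata_checked: no CID found in w3 output for $1" >&2
    return 1
  fi
  # Write the content link and echo the CID, as the original function does
  printf '%s\n' "$cid" | tee "$1.cid"
}
```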

Add the content link to the source tree

Move the content link file into the source tree at the location where the actual file is desired in the build tree, that is, in the directory referenced by the CMakeLists.txt script.

Stage the new file to your commit:

```shell
git add -- path/to/file.cid
```

The next time CMake configuration runs, it will find the new content link. During the next project build, the data file corresponding to the content link will be downloaded into the build tree.